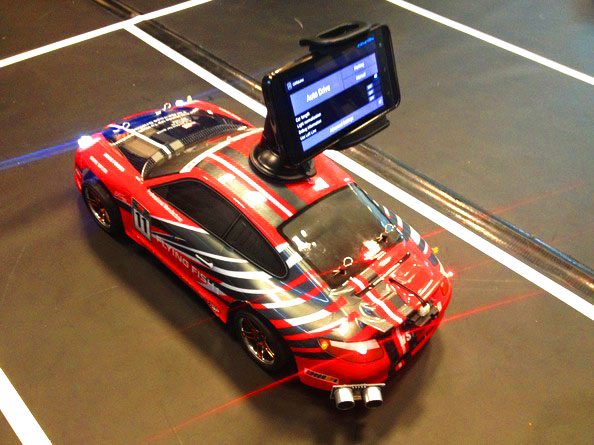

A self-driving vehicle using image recognition on Android

Dimitri Platis is a software engineer who has been working with his team on an Android-based self-driving vehicle. It uses machine vision algorithms together with data from its on-board sensors to follow street lanes, perform parking manoeuvres and overtake obstacles blocking its path:

The innovative aspect of this project is, first and foremost, the use of an Android phone as the unit that performs the image processing and decision making. It is responsible for wirelessly transmitting instructions to an Arduino Mega, which controls the physical aspects of the vehicle. Secondly, the various hardware components (sensors, motors, etc.) are handled programmatically in an object-oriented way, using a custom-made Arduino library. This lets developers without a background in embedded systems accomplish their tasks easily, without caring about lower-level implementation details.

[…]

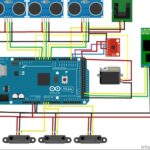

On the software side of the physical layer, an Arduino library was created (based on previous work of mine [1], [2]) which encapsulates the usage of the various sensors and lets us handle them in an object-oriented manner. The API offers a high level of abstraction, primarily targeting novice users who “just want to get the job done”. The exposed components should, however, also be enough for more intricate user goals. The library is not yet ready to be deployed out of the box on different hardware platforms, as it was built for an in-house system; with minor modifications, however, that should not be a difficult task. The library was developed with the following components in mind: an ESC, a servo motor for steering, HC-SR04 ultrasonic distance sensors, SHARP GP2D120 infrared distance sensors, an L3G4200D gyroscope, a speed encoder and a Razor IMU. Finally, you can find the sketch running on the actual vehicle here. Keep in mind that all decision making is done on the mobile device, so the microcontroller’s responsibility is just to fetch commands, encoded as Netstrings, and execute them, while reading sensor data and transmitting it back.
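To make the Netstrings mention concrete: a netstring frames a payload as `<length>:<payload>,`, so the receiver knows exactly how many bytes to read before acting on a command. The following is a minimal sketch in plain C++, not the project's actual code. The function names `encodeNetstring` and `decodeNetstring` are hypothetical, and the vehicle's real command payloads are not shown in the text, so none are assumed here.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper (not from the project's library):
// wraps a payload as a netstring, "<length>:<payload>,".
std::string encodeNetstring(const std::string& payload) {
    return std::to_string(payload.size()) + ":" + payload + ",";
}

// Hypothetical helper: extracts the payload from exactly one complete
// netstring. Returns false if the input is malformed.
bool decodeNetstring(const std::string& input, std::string& payload) {
    const std::size_t colon = input.find(':');
    // Require 1 to 9 length digits so the length cannot overflow.
    if (colon == std::string::npos || colon == 0 || colon > 9) return false;

    std::size_t length = 0;
    for (std::size_t i = 0; i < colon; ++i) {
        const char c = input[i];
        if (c < '0' || c > '9') return false;  // length must be decimal digits
        length = length * 10 + static_cast<std::size_t>(c - '0');
    }

    // Expected size: length digits, ':', payload bytes, trailing ','.
    if (input.size() != colon + 1 + length + 1) return false;
    if (input.back() != ',') return false;

    payload = input.substr(colon + 1, length);
    return true;
}
```

Because the length comes first, a receiver on a serial or Bluetooth link can reject truncated or corrupted frames instead of executing a partial command, which is presumably part of the appeal for a vehicle controller.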

Check out the Arduino library on GitHub, explore the circuit below and enjoy the car in the video:

Here’s the essential bill of materials:

- Electronic Speed Controller (ESC)
- Servo motor (steering)
- Speed encoder
- Ultrasonic sensors (HC-SR04, SRF05)
- Infrared distance sensors (SHARP GP2D120)
- Gyroscope (L3G4200D)
- 9DOF IMU (Razor IMU)
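As an illustration of what handling such components "in an object-oriented way" can look like, here is a minimal sketch in plain C++. It is not the library's actual API: `DistanceSensor`, `UltrasonicSensor`, `EchoTimer` and `pathBlocked` are hypothetical names. The only hardware fact it relies on is that an HC-SR04-style sensor reports distance as the width of an echo pulse, which on an Arduino could be measured with `pulseIn(echoPin, HIGH)`. Sending the trigger pulse is left out.

```cpp
#include <cassert>

// Hypothetical interface: anything that can report a distance in centimetres.
class DistanceSensor {
public:
    virtual ~DistanceSensor() = default;
    virtual unsigned int getDistanceCm() = 0;
};

// Assumed callback: returns the echo pulse width in microseconds,
// or 0 if no echo arrived. On an Arduino it could wrap pulseIn().
using EchoTimer = unsigned long (*)();

// Hypothetical ultrasonic sensor wrapper (HC-SR04 style).
class UltrasonicSensor : public DistanceSensor {
public:
    explicit UltrasonicSensor(EchoTimer readEchoMicros)
        : readEchoMicros_(readEchoMicros) {
        assert(readEchoMicros_ != nullptr);
    }

    unsigned int getDistanceCm() override {
        // Sound travels roughly 0.0343 cm per microsecond at 20 degrees C
        // and the echo covers the distance twice, so 1 cm is about 58 us.
        return static_cast<unsigned int>(readEchoMicros_() / 58UL);
    }

private:
    EchoTimer readEchoMicros_;
};

// Calling code depends only on the interface, not on the sensor type.
bool pathBlocked(DistanceSensor& sensor, unsigned int thresholdCm) {
    const unsigned int cm = sensor.getDistanceCm();
    return cm != 0 && cm < thresholdCm;  // 0 is treated as "no echo"
}
```

With this shape, an infrared sensor could implement the same interface with its own conversion, and code such as `pathBlocked` would not need to change.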

July 17th, 2015 at 01:28:18

Hi, I had exactly the same idea, which I implemented in a project. I would like to collaborate.

July 22nd, 2015 at 21:07:05

Hi, I am working on a similar project, where the car (a wheeled robotic arm) will have an Android device doing “object recognition” to sort and place recognized objects using OpenCV. I am not very proficient at OpenCV. Do you have the Android (C, Java) code or examples to share? I am struggling a bit between recognition of “matched” objects and object avoidance in general. Great approach on the Arduino side!! (I implemented a serial comm protocol but yours is way more elegant)

July 27th, 2015 at 22:25:32

@danielhdz try looking for blob detection in OpenCV

January 30th, 2017 at 21:21:51

[…] Our vehicle was featured in major maker websites, such as Makezine, Atmel Corporation, the Arduino blog, Huffington Post [FR], Popular Mechanics and Omicrono among […]