This device converts gestures into keystrokes

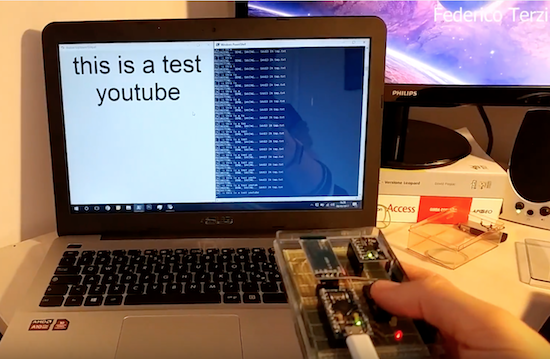

Engineering student Federico Terzi has built an impressive computer interface device reminiscent of a Wiimote.

When talking in person, you can express meaning with facial expressions and your hands. Usually these gestures add emphasis to a statement or point out a certain object, but what if you could actually type letters based on how your hands move?

Terzi’s aptly named “Gesture Keyboard” does just this, using an Arduino Pro Micro, an MPU-6050 accelerometer, and an HC-06 Bluetooth module to send the motion readings to his laptop. A Python library built on scikit-learn’s support vector machine (SVM) algorithm then translates those readings into characters that appear on the screen.
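To give a rough idea of how an SVM can turn motion readings into characters, here is a minimal sketch. It is not Terzi’s code: the helper names (`to_features`, `fake_gesture`), the resampling length, and the synthetic data standing in for real accelerometer recordings are all assumptions made for illustration. Each recording is resampled to a fixed length, flattened into a feature vector, and passed to scikit-learn’s `SVC`.

```python
import numpy as np
from sklearn.svm import SVC

N_SAMPLES = 20  # hypothetical fixed length each recording is resampled to


def to_features(recording, n=N_SAMPLES):
    """Resample a (T, 3) accelerometer recording to n points per axis, then flatten."""
    recording = np.asarray(recording, dtype=float)
    old_t = np.linspace(0.0, 1.0, len(recording))
    new_t = np.linspace(0.0, 1.0, n)
    axes = [np.interp(new_t, old_t, recording[:, k]) for k in range(3)]
    return np.concatenate(axes)


def fake_gesture(label, rng):
    """Synthetic stand-in for a real recording: 'a' swings along x, 'b' along y."""
    length = int(rng.integers(30, 60))  # recordings vary in length
    t = np.linspace(0.0, 1.0, length)
    data = rng.normal(0.0, 0.1, size=(length, 3))  # sensor noise on all axes
    data[:, 0 if label == "a" else 1] += np.sin(2 * np.pi * t)
    return data


rng = np.random.default_rng(0)
labels = ["a", "b"] * 40
X = np.array([to_features(fake_gesture(lab, rng)) for lab in labels])
y = np.array(labels)

# Train on the first 60 recordings, then check the remaining 20.
clf = SVC(kernel="rbf", gamma="scale")
clf.fit(X[:60], y[:60])
predicted = clf.predict(X[60:])
accuracy = float(np.mean(predicted == y[60:]))
print("held-out accuracy:", accuracy)
```

In a real setup, the synthetic recordings would be replaced by labeled samples streamed from the device, with one label per character to be recognized.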

You can find the code and more information on Terzi’s GitHub page.