Using Arduino UNO to sync a visual neuroscience lab

A common way to study the visual system in the laboratory is to record neural activity while presenting sensory stimuli, which helps scientists understand how neurons encode and respond to specific visual inputs.

One of the biggest technical problems in the neural recording setups used in such experiments is achieving precise synchronization among the multiple devices involved, including microscopes and the screens displaying the stimuli, so that neural responses can be accurately mapped to the visual events.

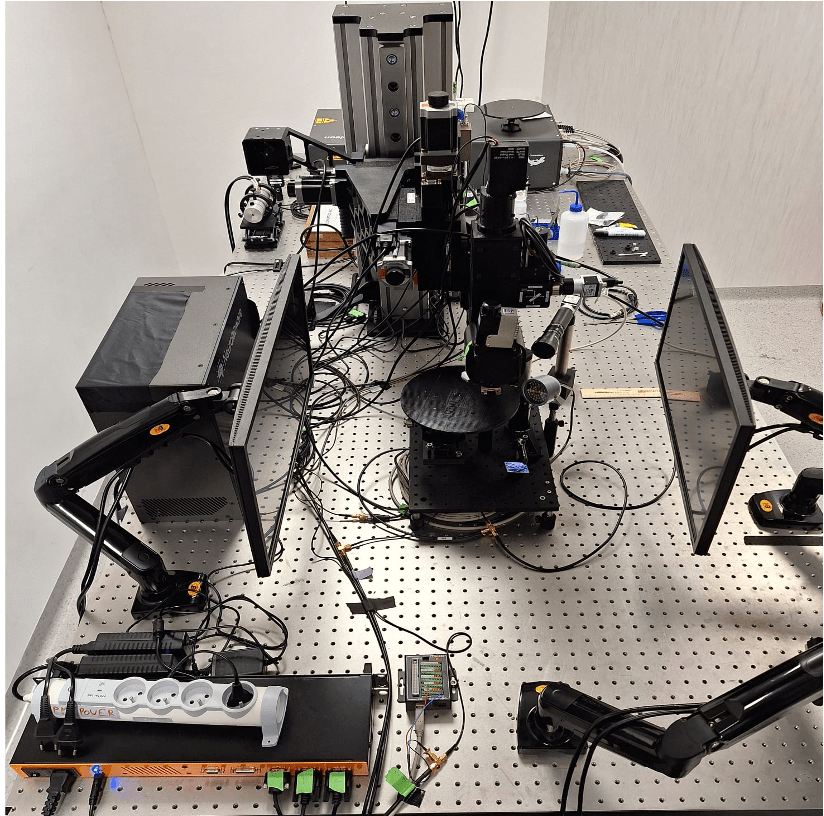

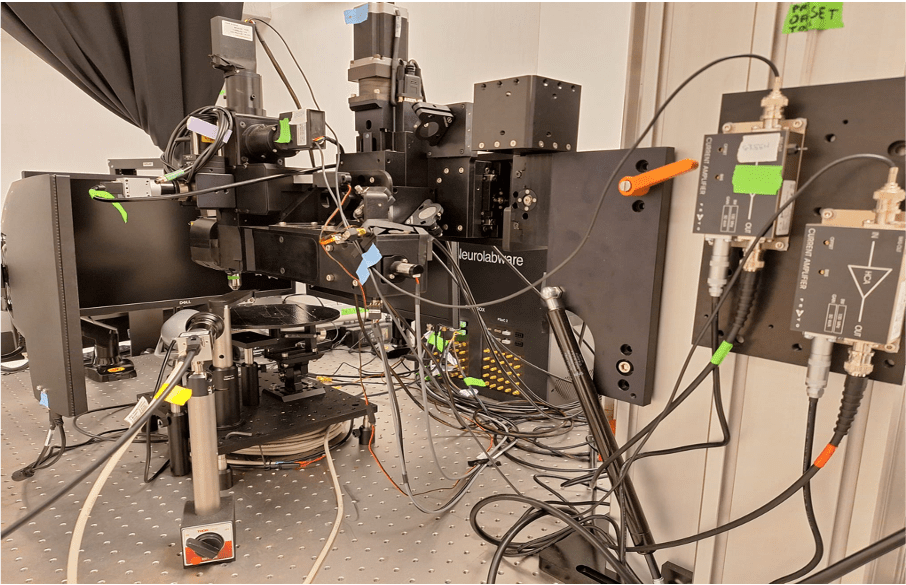

For example, in the Rompani Lab, a visual neuroscience laboratory at the European Molecular Biology Laboratory (EMBL) in Rome, the recording system (a two-photon microscope) needs to communicate with the visual stimulation system (composed of two screens), which shows visual stimuli while neural activity is recorded. To synchronize these systems efficiently, the lab turned to an Arduino UNO Rev3. “Its simplicity, reliability, and ease of integration made it an ideal tool for handling the timing and communication between different devices in the lab,” says Pietro Micheli, PhD student at EMBL Rome.

How the setup works

The Arduino UNO Rev3 is used to signal to the microscope when the stimulus (which is basically just a short video) starts and when it ends. While the microscope is recording and acquiring frames, simple firmware on the UNO listens to the data stream on a COM port of the computer that controls the visual stimulation.

Within the Python® script used for controlling the screens, every time a new stimulus starts, a command is written to the serial port. The microcontroller reads the command, which can be either ‘H’ or ‘L’, and sets the voltage of the TTL output on pin 9 to 5 V or 0 V, respectively. This TTL signal goes to the microscope controller, which generates timestamps for the microscope status. These timestamps contain the exact frame numbers of the microscope recording at which the stimulus started (rising edge of the TTL) and ended (falling edge of the TTL).
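To make the host side of this exchange concrete, here is a minimal Python sketch of how a stimulation script might wrap a stimulus with the ‘H’ and ‘L’ commands. It is not the lab's actual code: the function names `send_ttl_command` and `present_stimulus` are hypothetical, and the port is any object with `write()` and `flush()`, so the sketch runs without hardware. With a real board, one would likely open the port with the pySerial library instead; the port name and baud rate shown in the comment are assumptions.

```python
import io
import time


def send_ttl_command(port, level_high):
    """Write b'H' (TTL high) or b'L' (TTL low) to a serial-like port."""
    command = b"H" if level_high else b"L"
    port.write(command)
    port.flush()  # push the byte out immediately to limit timing jitter
    return command


def present_stimulus(port, play_stimulus):
    """Raise the TTL line, play the stimulus, then lower the line again."""
    send_ttl_command(port, True)
    try:
        play_stimulus()
    finally:
        # Lower the line even if the stimulus code raises an exception.
        send_ttl_command(port, False)


if __name__ == "__main__":
    # Stand-in for a real port. With hardware this could be, for example,
    # serial.Serial("COM3", 9600, timeout=1) from pySerial (name and baud
    # rate are assumptions, not taken from the lab's setup).
    fake_port = io.BytesIO()
    present_stimulus(fake_port, lambda: time.sleep(0.01))
    print(fake_port.getvalue())  # b'HL'
```

Sending the ‘L’ command in a `finally` clause is a design choice of this sketch: it keeps the TTL line from staying high if the stimulus code fails partway through.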

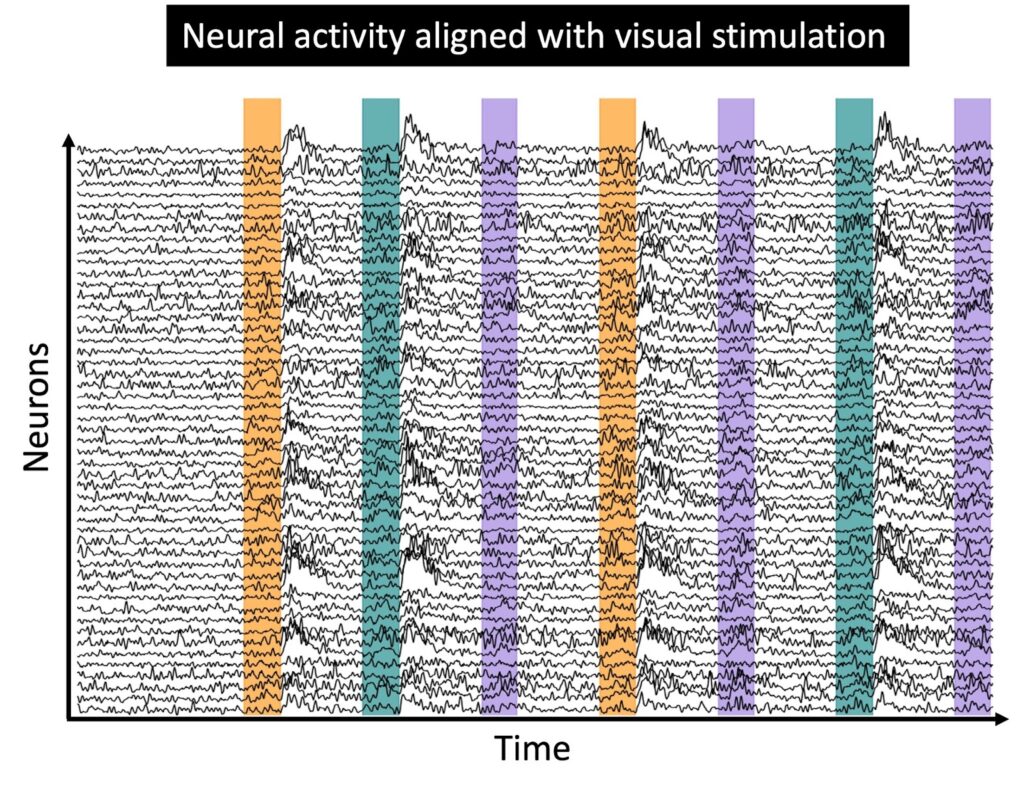

All this information is essential for the analysis of the recording, as it allows the researchers at EMBL Rome to align the recorded neural responses with the stimulation protocol presented. Once the neural activity is aligned, the downstream analysis of the underlying brain activity can begin.
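As a rough illustration of this alignment step, the following Python sketch finds stimulus epochs from rising and falling TTL edges and cuts a fluorescence trace accordingly. The data format is an assumption made for the example: it takes one TTL level per microscope frame as a plain list, whereas the real microscope controller produces its own timestamp format. The function names are hypothetical as well.

```python
def find_stimulus_epochs(ttl_per_frame):
    """Return (start_frame, end_frame) pairs from per-frame TTL levels.

    start_frame is the first frame with the line high (rising edge);
    end_frame is the first frame with the line low again (falling edge),
    so it works as an exclusive end index. A line that is already high
    at frame 0 is treated as a stimulus starting at frame 0, and an
    epoch that never ends within the recording is dropped.
    """
    epochs = []
    start = None
    previous = 0
    for frame, level in enumerate(ttl_per_frame):
        if level and not previous:
            start = frame  # rising edge: stimulus onset
        elif previous and not level and start is not None:
            epochs.append((start, frame))  # falling edge: stimulus offset
            start = None
        previous = level
    return epochs


def split_trace_by_epoch(trace, epochs):
    """Cut one neuron's fluorescence trace into per-stimulus segments."""
    return [trace[start:end] for start, end in epochs]


if __name__ == "__main__":
    ttl = [0, 0, 1, 1, 1, 0, 0, 1, 1, 0]  # made-up example values
    trace = [0.1, 0.1, 0.9, 1.2, 1.0, 0.3, 0.2, 0.8, 0.7, 0.2]
    epochs = find_stimulus_epochs(ttl)
    print(epochs)  # [(2, 5), (7, 9)]
    print(split_trace_by_epoch(trace, epochs))  # [[0.9, 1.2, 1.0], [0.8, 0.7]]
```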

Ever wonder what firing neurons look like?

Micheli shared with us an example of the type of neural activity acquired during an experimental session with the setup described above.

The small blinking dots are individual neurons recorded from the visual cortex of an awake, behaving mouse. The signal being monitored is the fluorescence of a particular protein produced by neurons, which indicates their activity level. After the light emitted by the neurons has been recorded and digitised, researchers extract fluorescence traces for each neuron. At this point, they can proceed with the analysis of the neural activity, to try to understand how the visual stimuli shown are actually encoded by the recorded neural population.