Tapping without seeing: Making touchscreens accessible

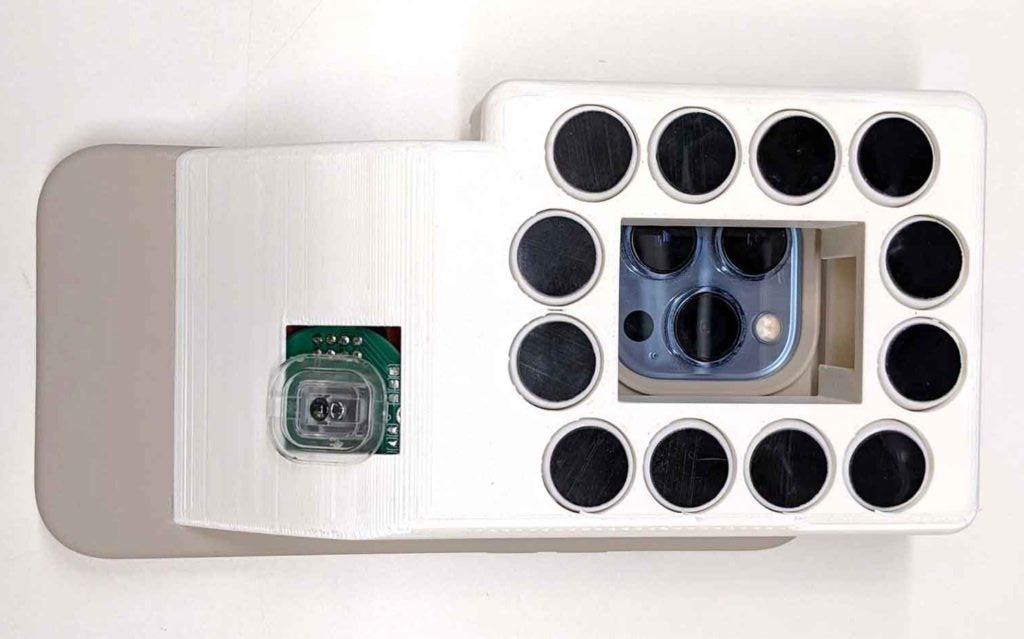

Modern devices rely heavily on touchscreens because they allow for dynamic interfaces that aren’t possible with conventional tactile buttons. But those interfaces present a problem for people with certain disabilities. A person with vision loss, for example, might not be able to see the screen’s content or its virtual buttons at all. To make touchscreens more accessible, a team of engineers from the University of Michigan developed a special phone case called BrushLens.

The case extends a smartphone with a matrix of either actuators or capacitive touch simulator pads. The former work with all types of touchscreens (including resistive), while the latter work only with capacitive touchscreens, which are the most common type today. The smartphone’s own camera and sensors let it detect its position on a larger touchscreen, so it can guide a user to a virtual button and then press that button itself.
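To make the "press that button itself" step concrete, here is a minimal Python sketch of how a phone might pick which pad in the matrix sits over a target button. It is not BrushLens's actual code: the `PadGrid` class, the `nearest_pad` function, the millimeter coordinate system, and the tolerance value are all assumptions for illustration, and the sketch ignores any rotation of the case relative to the screen.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PadGrid:
    """Hypothetical rectangular matrix of touch pads on the case."""
    rows: int
    cols: int
    pitch_mm: float  # assumed spacing between neighboring pad centers

    def pad_center(self, row: int, col: int,
                   origin: Tuple[float, float]) -> Tuple[float, float]:
        # origin = position of pad (0, 0) in target-screen coordinates,
        # as estimated from the phone's camera and sensors.
        ox, oy = origin
        return (ox + col * self.pitch_mm, oy + row * self.pitch_mm)


def nearest_pad(grid: PadGrid, origin: Tuple[float, float],
                target: Tuple[float, float],
                tolerance_mm: float) -> Optional[Tuple[int, int]]:
    """Return (row, col) of the pad closest to the target button,
    or None if no pad is within tolerance_mm of it."""
    best = None
    best_dist = tolerance_mm
    for row in range(grid.rows):
        for col in range(grid.cols):
            x, y = grid.pad_center(row, col, origin)
            dist = math.hypot(x - target[0], y - target[1])
            if dist <= best_dist:
                best, best_dist = (row, col), dist
    return best


grid = PadGrid(rows=2, cols=3, pitch_mm=10.0)
hit = nearest_pad(grid, origin=(100.0, 50.0), target=(121.0, 59.0),
                  tolerance_mm=3.0)
miss = nearest_pad(grid, origin=(100.0, 50.0), target=(105.0, 55.0),
                   tolerance_mm=3.0)
```

When the result is `None`, no pad is close enough to the button, which is the case where the user would need to be guided to move the phone before a press is attempted.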

The prototype hardware includes an Arduino Nano 33 IoT board to control the actuators and/or capacitive touch points. It receives its commands from the smartphone via Bluetooth® Low Energy.
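The article does not describe the command format sent over Bluetooth Low Energy, so the following Python sketch only illustrates what a compact "press this pad" message could look like. The opcode value, the field layout, and the function names are hypothetical and are not taken from the BrushLens prototype.

```python
import struct

# Hypothetical opcode for "actuate one pad"; not from the BrushLens project.
CMD_PRESS = 0x01

# Assumed layout: opcode, row, col (1 byte each), duration in ms (2 bytes),
# little-endian with no padding, for a total of 5 bytes.
_PRESS_FORMAT = "<BBBH"


def encode_press(row: int, col: int, duration_ms: int) -> bytes:
    """Pack a press command into bytes suitable for a BLE characteristic write."""
    if not (0 <= row <= 0xFF and 0 <= col <= 0xFF):
        raise ValueError("row and col must each fit in one byte")
    if not 0 <= duration_ms <= 0xFFFF:
        raise ValueError("duration_ms must fit in two bytes")
    return struct.pack(_PRESS_FORMAT, CMD_PRESS, row, col, duration_ms)


def decode_press(payload: bytes) -> tuple:
    """Unpack a press command, as the microcontroller side would."""
    opcode, row, col, duration_ms = struct.unpack(_PRESS_FORMAT, payload)
    if opcode != CMD_PRESS:
        raise ValueError("unexpected opcode")
    return row, col, duration_ms


packet = encode_press(row=1, col=2, duration_ms=150)
decoded = decode_press(packet)
```

A fixed-size binary message like this stays well under the 20-byte payload available with the default BLE ATT MTU, so a single write would be enough per press. On the board itself, the equivalent decoding would be written in C++ rather than Python.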

For that to work, the smartphone must understand the target touchscreen and communicate the content to the user. That communication is possible using existing text-to-speech techniques, but analyzing target touchscreens is more difficult. Ideally, UI designers would include some sort of identifier so the user’s smartphone can query screen content and button positions. However, that would add expense and require rebuilding existing interfaces. For that reason, BrushLens includes some ability to analyze touchscreens and their content on its own.

This is a very early prototype, but the concept has a great deal of potential for making a world full of touchscreens more accessible to those living with disabilities.

Image credit: Chen Liang, doctoral student, University of Michigan’s Computer Science and Engineering