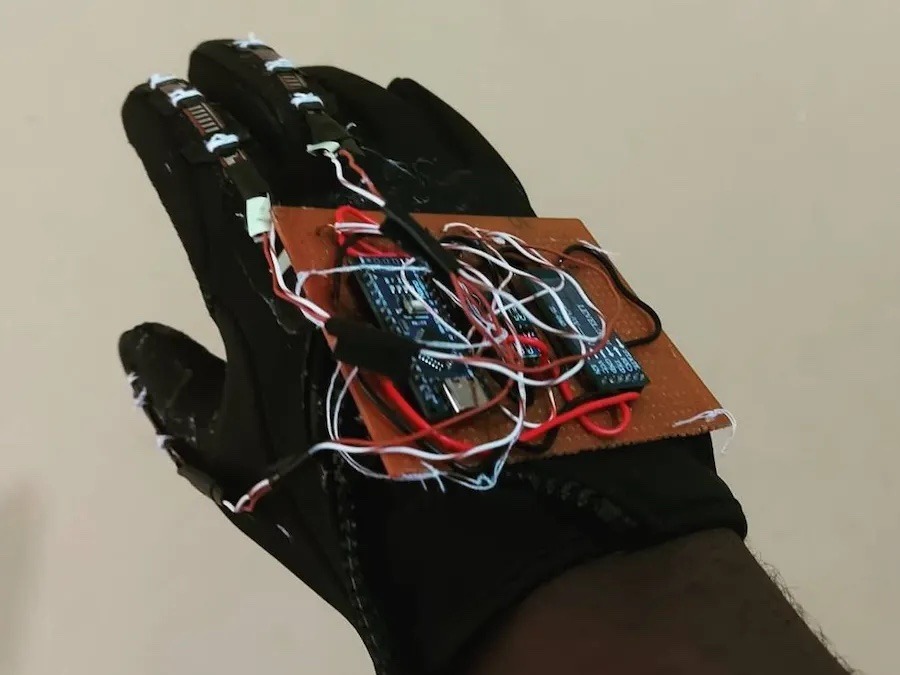

This glove translates sign language using an array of sensors

For people unfamiliar with American Sign Language (ASL), recognizing what particular hand motions and positions mean is nearly impossible. To make this easier, Hackster.io user ayooluwa98 came up with the idea of integrating motion, resistive, and touch sensors into a single glove that converts signs into understandable text and speech.

The system is based around a single Arduino Nano board, which takes in sensor data and outputs the phrase that best matches the inputs. The orientation of the hand is determined by reading the X, Y, and Z axes of a single accelerometer and applying a small correction derived from a prior calibration. Meanwhile, resistive flex sensors spanning the length of each finger produce a voltage that varies with how far the finger is bent.
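To make the calibration and flex-sensor steps more concrete, here is a minimal sketch in plain C++. It is not taken from ayooluwa98's code: the names `AxisOffsets`, `applyCalibration`, and `bendFraction`, and the idea of storing per-finger "straight" and "bent" readings, are assumptions made for illustration. Raw values are treated as ADC counts such as those from the Nano's 10-bit converter (0 to 1023).

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical calibration record: raw axis readings captured while the
// hand rests in a known reference pose.
struct AxisOffsets { int x, y, z; };

// One raw accelerometer sample (ADC counts per axis).
struct Accel { int x, y, z; };

// Subtract the stored reference-pose offsets from a raw sample so that
// later comparisons are made against a calibrated zero point.
Accel applyCalibration(Accel raw, AxisOffsets offsets) {
    return {raw.x - offsets.x, raw.y - offsets.y, raw.z - offsets.z};
}

// Map a raw flex-sensor reading to a bend fraction between 0.0 (straight)
// and 1.0 (fully bent). `straight` and `bent` are assumed per-finger
// readings recorded during calibration; either may be the larger value.
double bendFraction(int raw, int straight, int bent) {
    assert(straight != bent);  // avoid dividing by zero
    double fraction = static_cast<double>(raw - straight) / (bent - straight);
    return std::max(0.0, std::min(1.0, fraction));  // clamp out-of-range readings
}
```

Normalizing each finger to a fraction, rather than comparing raw counts, would let the same matching rules work even if individual sensors have different resistance ranges.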

At each iteration of the program’s main loop, a series of Boolean statements is evaluated to pick the phrase that best matches the current finger bends and hand orientation, and the result is then sent via the UART pins to an attached HC-05 Bluetooth® module. The final component is a connected phone running a custom app that receives the incoming words over Bluetooth® and saves them for text-to-speech output when a button is pressed.
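The phrase-matching step described above can be sketched as a chain of Boolean checks. The example below is only an illustration in plain C++: the `HandState` struct, the `matchPhrase` function, and the gesture-to-phrase rules are hypothetical and do not represent real ASL signs or the project's actual rule set.

```cpp
#include <string>

// Hypothetical snapshot of the glove: one flag per finger (true when the
// finger is bent past a threshold) plus a coarse orientation flag derived
// from the accelerometer.
struct HandState {
    bool thumb, index, middle, ring, pinky;
    bool palmUp;
};

// Evaluate a series of Boolean conditions and return the first phrase that
// matches, or an empty string when nothing matches. The rules here are
// placeholders chosen for illustration only.
std::string matchPhrase(const HandState& h) {
    bool allBent = h.thumb && h.index && h.middle && h.ring && h.pinky;
    bool allStraight = !h.thumb && !h.index && !h.middle && !h.ring && !h.pinky;

    if (allBent && !h.palmUp) return "YES";
    if (allStraight && h.palmUp) return "HELLO";
    if (h.thumb && !h.index && !h.middle && h.ring && h.pinky) return "PEACE";
    return "";  // no rule matched this loop iteration
}
```

On the Nano, a non-empty result could be written to the hardware UART with `Serial.println`, which is how the text would reach a Bluetooth module wired to the TX and RX pins.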

To see more about this project, you can read ayooluwa98’s write-up here on Hackster.io.