See how Nikodem Bartnik integrated LIDAR room mapping into his DIY robotics platform

As part of his ongoing autonomous robot project, YouTuber Nikodem Bartnik wanted to add LIDAR mapping/navigation functionality so that his device could see the world in much greater resolution and actively avoid obstacles. In short, LIDAR works by sending out short pulses of invisible light and measuring how much time it takes for the beam to reflect off an object and return to its detector. By combining this distance value with the angle of the sensor at the moment of measurement, a virtual cloud of points can be built and used to represent the entire space around the robot.
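The geometry described above can be sketched in a few lines of Python. This is a minimal illustration and not Bartnik's code: the function names are hypothetical, and the convention that 0° points along the positive x-axis with angles increasing counterclockwise is an assumption that depends on how a given sensor is mounted.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_distance_m(round_trip_s: float) -> float:
    """Convert a round-trip time of flight into a one-way distance in meters."""
    # The pulse travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def polar_to_cartesian(angle_deg: float, distance_m: float) -> tuple[float, float]:
    """Turn one (angle, distance) reading into an (x, y) point around the sensor."""
    angle_rad = math.radians(angle_deg)
    return (distance_m * math.cos(angle_rad), distance_m * math.sin(angle_rad))


def build_point_cloud(measurements):
    """Convert an iterable of (angle_deg, distance_m) readings into (x, y) points."""
    return [polar_to_cartesian(angle, dist) for angle, dist in measurements]
```

Feeding one full rotation of readings into `build_point_cloud` gives a 2D slice of the room with the sensor at the origin.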

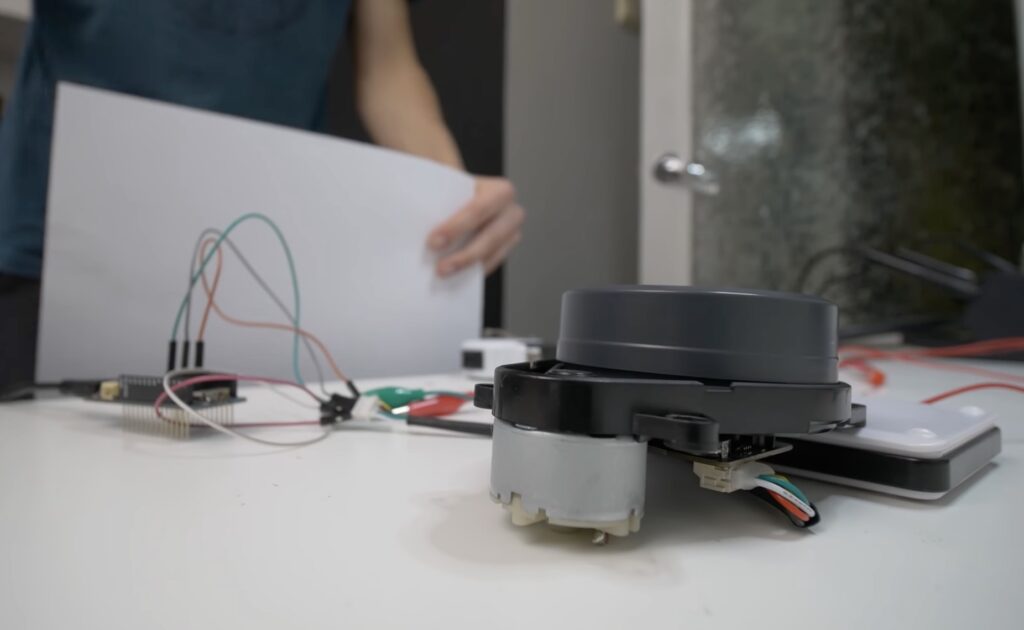

The LIDAR module Bartnik opted to use was fairly simple to work with, as it sends its measurements over UART in frames that encode the sensor’s angle, the measured distance, and the speed of the device. He then wrote a simple sketch for the MKR WiFi 1010 that uses the board’s processing power and Wi-Fi connectivity to read those values and send them to a host machine for further processing and visualization.
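To make the idea of a measurement frame concrete, here is a rough Python sketch of decoding one. The byte layout of Bartnik's module is not given in this article, so everything below is an invented, hypothetical format: a `0xAA` header byte, three little-endian 16-bit fields (speed, angle in hundredths of a degree, distance in millimeters), and a one-byte additive checksum. A real module's datasheet defines the actual layout.

```python
import struct

# HYPOTHETICAL frame layout, for illustration only:
# header (1 byte) | speed (u16) | angle x100 (u16) | distance mm (u16) | checksum (1 byte)
FRAME_FORMAT = "<BHHHB"
FRAME_SIZE = struct.calcsize(FRAME_FORMAT)  # 8 bytes
HEADER = 0xAA


def parse_frame(frame: bytes):
    """Decode one frame, or return None if it is malformed."""
    if len(frame) != FRAME_SIZE:
        return None
    header, speed, angle_raw, distance_mm, checksum = struct.unpack(FRAME_FORMAT, frame)
    if header != HEADER:
        return None
    # Checksum is assumed to be the low byte of the sum of all preceding bytes.
    if sum(frame[:-1]) & 0xFF != checksum:
        return None
    return {
        "speed": speed,
        "angle_deg": angle_raw / 100.0,
        "distance_m": distance_mm / 1000.0,
    }


def make_frame(speed: int, angle_deg: float, distance_m: float) -> bytes:
    """Build a frame in the same hypothetical layout (handy for testing)."""
    body = struct.pack("<BHHH", HEADER, speed,
                       round(angle_deg * 100), round(distance_m * 1000))
    return body + bytes([sum(body) & 0xFF])
```

Rejecting frames with a bad header or checksum keeps corrupted serial data from turning into phantom points later in the pipeline.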

On the host side, a Python script opens a websocket to receive this data, applies some basic filtering, and then displays the result as a point cloud. It also determines the direction in which the robot should move and sends that command back to the MKR board, which in turn tells the attached Arduino Uno how to drive the motors.
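The article does not say how the filtering or the direction decision actually works, so the following is only one plausible sketch under stated assumptions: readings outside a valid range are dropped, 0° is straight ahead, positive angles are to the robot's left, and the robot simply turns toward whichever side has more free space when the path ahead is blocked. All names and thresholds are hypothetical.

```python
def filter_points(measurements, min_m=0.05, max_m=8.0):
    """Keep only readings whose distance is inside the assumed valid range."""
    return [(angle % 360.0, dist) for angle, dist in measurements
            if min_m <= dist <= max_m]


def _signed_angle(angle_deg: float) -> float:
    """Map an angle to [-180, 180), with 0 meaning straight ahead."""
    return ((angle_deg + 180.0) % 360.0) - 180.0


def _nearest_in_sector(measurements, low_deg, high_deg) -> float:
    """Distance to the closest reading in a sector; infinity if the sector is empty."""
    dists = [dist for angle, dist in measurements
             if low_deg <= _signed_angle(angle) < high_deg]
    return min(dists, default=float("inf"))


def choose_direction(measurements, clearance_m=0.5) -> str:
    """Return 'forward', 'left', or 'right' from (angle_deg, distance_m) readings."""
    front = _nearest_in_sector(measurements, -30.0, 30.0)
    if front >= clearance_m:
        return "forward"
    # Assumption: positive angles are on the robot's left side.
    left = _nearest_in_sector(measurements, 30.0, 90.0)
    right = _nearest_in_sector(measurements, -90.0, -30.0)
    return "left" if left > right else "right"
```

The returned string stands in for whatever command the host would send back over the websocket; an empty sector is treated as open space here, which a more careful implementation might want to handle differently.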