Mapping Dance syncs movement and stage lighting using tinyML

Dynamic lighting and imagery that stay in sync with a dancer are central to many performances, which rely on both music and visual effects to create the show. Eduardo Padrón set out to achieve this by monitoring a performer’s moves with an accelerometer and triggering the appropriate AV experience based on the recognized movement.

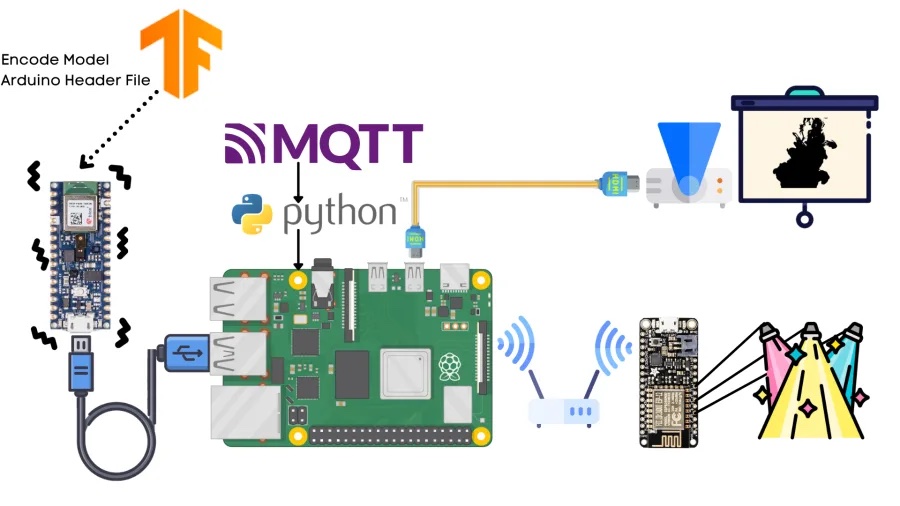

Padrón’s system is designed around a Raspberry Pi 4 running an MQTT server for communication with auxiliary IoT boards. Movement data was collected with the onboard accelerometer of a Nano 33 BLE Sense and sent to a Google Colab environment. There, a model was trained on these samples for 600 epochs, achieving an accuracy of around 91%. After deploying this model onto the Arduino, he was able to output the recognized gesture over USB to the running Python script. Once the gesture is received, a message is published through the MQTT server to any client devices, such as an ESP8266 for lighting, and an associated video or sound is played.
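To make the last step of that pipeline concrete, here is a minimal Python sketch of how a script might turn a gesture label read from the serial port into an MQTT message. It is not Padrón’s actual code: the gesture names, topics, and payloads in `GESTURE_ACTIONS` are hypothetical, as are the helper names `parse_gesture` and `dispatch`. The `publish` argument is any callable that takes a topic and a payload, so the logic can be tried without hardware.

```python
from typing import Callable, Optional

# Hypothetical gesture labels mapped to (MQTT topic, payload).
# The real project's labels and topics are not given in the article.
GESTURE_ACTIONS = {
    "spin": ("stage/lights", "strobe"),
    "jump": ("stage/lights", "flash"),
    "wave": ("stage/video", "waves.mp4"),
}


def parse_gesture(raw: bytes) -> Optional[str]:
    """Decode one line received over USB serial.

    Returns the gesture label if it is known, otherwise None.
    """
    label = raw.decode("utf-8", errors="ignore").strip().lower()
    return label if label in GESTURE_ACTIONS else None


def dispatch(raw: bytes, publish: Callable[[str, str], object]) -> bool:
    """Publish the message for a recognized gesture.

    Returns True if something was published, False if the line was ignored.
    """
    gesture = parse_gesture(raw)
    if gesture is None:
        return False
    topic, payload = GESTURE_ACTIONS[gesture]
    publish(topic, payload)
    return True


if __name__ == "__main__":
    # Stand-in for a real MQTT client: collect messages in a list.
    sent = []
    dispatch(b"spin\r\n", lambda topic, payload: sent.append((topic, payload)))
    print(sent)
```

In a real setup, the raw lines could come from pyserial’s `Serial.readline()` and `publish` could be the `publish` method of a connected paho-mqtt `Client`. A subscribed ESP8266 would then react to the lighting topic. Keeping the mapping in a single dictionary means new gestures can be added without touching the dispatch logic.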

Padrón plans to add more features to his system in the future, such as Bluetooth® connectivity, kinetic sculptures, and more animation effects. You can read more about his project, which was named a winner in the TensorFlow Lite for Microcontrollers Challenge, on Devpost.